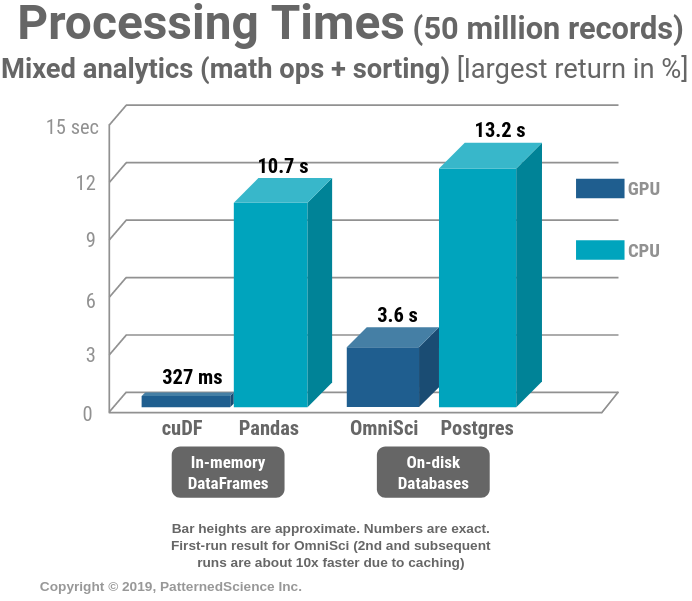

Talk/Demo: Supercharging Analytics with GPUs: OmniSci/cuDF vs Postgres/Pandas/PDAL | Masood Khosroshahy (Krohy), Senior Solution Architect (AI & Big Data)

Panda RGB GPU Backplate Custom Made for ANY Graphics Card Model now with Vent Cut Outs and ARGB (Addressable LEDs) - V1 Tech

NVIDIA's Answer: Bringing GPUs to More Than CNNs - Intel's Xeon Cascade Lake vs. NVIDIA Turing: An Analysis in AI

Canadian Trademarks Details: GPU Global Pandas Union & design, 1725065 - Canadian Trademarks Database

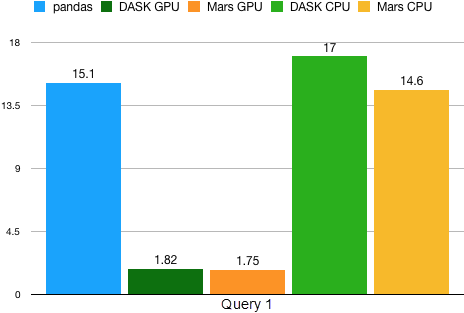

GitHub - patternedscience/GPU-Analytics-Perf-Tests: A GPU-vs-CPU performance benchmark: (OmniSci [MapD] Core DB / cuDF GPU DataFrame) vs (Pandas DataFrame / Postgres / PDAL)

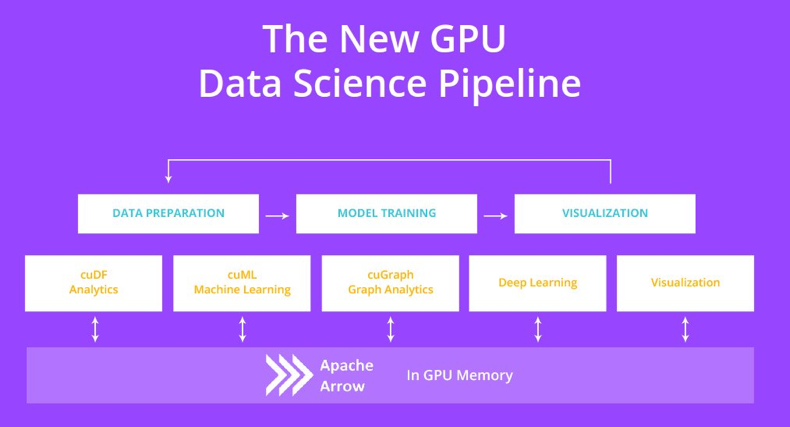

Pandas DataFrame Tutorial - Beginner's Guide to GPU Accelerated DataFrames in Python | NVIDIA Technical Blog